MIT Hack diaries: Sunshine Cove team on hacking

By Tiffany Schmidt, Naraly Serrano, Elena Palombini, Anissa Lopez, Brian Poole

April 03, 2024

In January 2024, about 500 technology enthusiasts traveled to the MIT campus to attend the 2024 Reality Hack, where participants have two and a half days to create projects using extended reality technology. Here is the story of how our team, Sunshine Cove, hacked our way to a project that would be innovative and meaningful using the Snapdragon Spaces XR Developer Platform.

It took the whole first day of hacking to work through iterations in the hope of landing on a final concept. Our final project idea came when teammate Nari shared her experience as a first-year math educator of maintaining a “memory box” of items like students’ drawings, appreciative emails from parents, or notes about light-hearted classroom moments to improve mental well-being. We started dreaming up what this could look like in the virtual world for someone who may not be able to share physical keepsakes. Our users could be a long-distance couple or an elderly person disconnected from their family members because of health concerns.

The Sunshine Cove MR experience invites users to craft a personalized virtual space where memories come to life. The cove acts as a canvas, allowing users to interact with a spatial collection of pictures, videos, notes, and audio recordings, keeping cherished moments within reach. What makes Sunshine Cove truly special is its collaborative feature: the ability to invite friends and family to upload to the virtual haven through an app on their phone, fostering a sense of connection and shared joy.

We knew we wanted to focus on a Mixed Reality project from the beginning. Mixed reality (MR) is defined as the combination of a virtual environment and the real world, in which it is possible to interact with digital objects, whereas augmented reality (AR) consists of a non-interactable overlay of digital elements onto the physical world. What attracted us to MR is the ability to maintain connection and visibility to the world around us, while still experiencing and interacting with virtual 3D objects and environments. Sunshine Cove’s focus on strengthening one’s happy memories is always connected to a vision of enrichment, not providing a completely different life or an escape, so it made sense for us to place our application in the context of the user’s physical world.

We were excited to develop on the Lenovo ThinkReality A3 smart glasses, which use the Snapdragon Spaces SDK, for a variety of reasons:

- They’re lightweight and easy to wear, more so than most XR headsets, and at first glance, they look like sunglasses. As smart glasses’ form factor continues to improve, we could see this technology becoming more usable and taking hold.

- The headset provides optical passthrough, so the user can see the real world accurately without the lag common in other XR headsets.

- The Dual Render Fusion feature opened up interesting possibilities, like making use of familiarity with mobile phone interaction to make experiences more intuitive or augmenting an already existing app with an immersive environment. These capabilities allowed us to envision a more accessible project, one that also enables collaboration by people who have only a smartphone and not the smart glasses.

- Most members of the team had never built a project with these smart glasses, so it was a unique opportunity for us to try this dev kit out, with the support of the mentors from Qualcomm Technologies, Inc. who were very present and helpful!

Challenges of using Lenovo ThinkReality A3 Smart Glasses and Snapdragon Spaces

- Limited field of view – we needed to make sure objects are the right size and at a suitable distance to limit cutoff.

- The need to quickly learn all the different parts of an unfamiliar.

- There was one instance when the project validator wasn’t describing issues as it was supposed to, which caused confusion.

- The smart glasses drained the phone battery extremely fast, causing loss of work time.

Things we learned during our first hackathon and will remember in the future:

- Have willingness to consider all perspectives and concepts

- Be quick on ideation – choose a solid idea sooner to have more time to create

- Know the parameters of the product you are using – research products before the hack

- Don’t underestimate the importance of time management

- Take short walks every so often to clear your mind

- Expect technical issues and bugs

- Keep a positive attitude even when things don’t go the way you planned

Sunshine Cove is a product of our hard work, passion, and perseverance as a team. We are incredibly grateful for the opportunity MIT’s 2024 Reality Hack offered to us as we made connections with others in the field and immersed ourselves in the future of XR technology. Thank you to the Snapdragon Spaces team for allowing MIT hackers to use their product and for their support in person. We often found ourselves being helped by the team members. We will continue to push boundaries and think outside of the box as we are hopeful for the future of tech and how it continues to change our future.

Snapdragon branded products are products of Qualcomm Technologies, Inc. and/or its subsidiaries.

MIT Hack diaries: Community Canvas team on winning the track

By Tom Xia, Yidan Hu, Joyce Zheng, Mashiyat Zaman, and Lily Yu

March 05, 2024

Introduction

Hi! We’re the team behind Community Canvas, this year’s winners of the Snapdragon Spaces track at MIT Reality Hack. We’re a group of graduate students from the Interactive Telecommunications Program at NYU, where we’ve been working on anything and everything from building arcades to creating clocks out of our shadows. It was our first time participating in Reality Hack, so we were blown away by all the tech we had at our disposal, and incredible ideas that the teams around us came up with for them.

Our project Community Canvas, developed with the Snapdragon Spaces developer kit and Lenovo ThinkReality smart glasses, is an AR application that allows users to redesign shared urban spaces with customized 3D assets. It includes a data platform providing insights on submissions for local governments, facilitating participatory budgeting to address community needs. We wanted to take you through our journey putting this idea together. It all started the afternoon of the opening ceremony, in Walker Memorial at MIT.

Thursday

Tom

As we stepped into the Reality Hack Inspiration Expo, we were struck by the number of sponsors showcasing an array of AR/VR headsets. It was a tech enthusiast’s dream come true. Among them, the Snapdragon Spaces stand caught our eye, where the Lenovo ThinkReality A3 glasses lay in wait.

At first glance, the ThinkReality A3 seemed unassuming, closely resembling a pair of standard sunglasses but with a slightly thicker frame and bezels. The cable connecting the glasses to a smartphone piqued my curiosity about its capabilities. Slipping on the A3, the lightness of the frame was a surprise. A closer inspection revealed a second layer of glass nestled behind the front panel, hinting at its holographic projection capabilities.

The Snapdragon Spaces team demonstrated the built-in map navigation through both a traditional 2D phone screen and an immersive 3D visualization via the AR glasses. Manipulating the map on the phone with simple pinches to zoom and rotate, I watched as a three-dimensional landscape of buildings and trees came to life within the headset, confined only by a circular boundary. The seamless transition between 2D and 3D, paired with the intuitive control system, was a revelation. This experience challenged my preconceived notions about spatial navigation in VR, which typically involved either controller raycasting or somewhat awkward hand gesture tracking. The tactile feedback of using a touch screen in tandem with the visual immersion offered by the glasses created a rich, spatially aware experience that felt both innovative and natural. One aspect that prompted further inquiry was the glasses’ tint. It seemed a bit too dark, slightly obscuring the real world. In conversation with the Snapdragon Spaces team, I discovered the glasses’ lenses could be easily removed, allowing for customization of their opacity to suit different environments and preferences.

My overall initial encounter with the Lenovo ThinkReality A3 smart glasses was profoundly inspiring. It underscored the incredible strides being made in wearable XR technology, achieving a level of lightweight flexibility that seemed futuristic just a few years ago.

Friday

Yidan

On the first day of the hackathon, our team embarked on a journey of innovation fueled by collaboration and a shared vision for change. Initially, our focus gravitated towards conventional methods, which led us to ponder their viability within the realm of XR technology. It was through our interactions with the Snapdragon Spaces team members that we truly began to grasp the potential of XR technology to impact lives in meaningful ways. The team encouraged us to leverage XR technology to transcend the constraints of the physical world, opening up possibilities for enhanced living experiences.

With diverse backgrounds and cultures represented within our team, we started to explore how XR technology could address real-world challenges. Drawing from my film production background, I raised the inefficiencies and costs associated with traditional film and television venue construction. Could XR technology streamline this process, allowing crews to visualize and plan sets digitally before physical construction? Meanwhile Tom, with roots in architecture and a connection to Shanghai’s disappearing heritage, pondered how XR could be used to preserve and communicate the stories of vanishing landmarks.

As discussions unfolded, two key themes emerged: community and communication. Armed with these guiding principles, we delved into research, exploring how Snapdragon Spaces technology could facilitate meaningful connections and empower communities.

Our team came up with two innovative solutions aimed at addressing community needs and fostering collaboration:

- AR Co-Design App: An augmented reality application that empowers users to reimagine and redesign spaces using customizable 3D assets. Whether it’s revitalizing a local park or transforming a vacant lot, this app enables communities to visualize and share their visions for change.

- Data Platform for Participatory Budgeting: A comprehensive platform that facilitates participatory budgeting, allowing local communities to allocate resources based on feedback. By providing insights and analytics on submitted proposals, this platform ensures transparency and accountability in decision-making processes.

After having these ideas, we immediately set to work, each team member contributing their expertise in different areas. Drawing from our diverse backgrounds, we divided tasks and collaborated to bring our vision to life. With a shared sense of purpose and determination, we were fueled by the belief that our solutions could truly make a difference in communities.

Saturday

Mashi

Having the Snapdragon Spaces team at the next table over was our lifesaver – with us the whole day, fielding all our troubleshooting questions. Designing for two user interfaces – both the phone and the smart glasses, each a different orientation – was quite unintuitive. In our drafts, we considered how we could ensure that the elements in either didn’t interfere with the other. For example, what if we thought of the phone interface as a remote control using familiar interactions like pressing and swiping, so that users could focus on experiencing the scene in front of them through the glasses?

While Lily and Tom investigated questions like these, modeling our ideas on Figma, Joyce and I wrestled with a more technical hurdle – how do we even implement these designs in Unity? In our combined experience developing in Unity so far, we never had to think about the user potentially looking at (or through) two screens at once! Luckily, the Snapdragon Spaces team was there to save the day. They recommended using the new Dual Render Fusion feature, which allows us to edit simultaneous renderings on both the mobile screen and smart glasses through the Unity editor. The team showed us that all we had to do to start using it was import a package from the Developer Portal and add another Game view matching the aspect ratio of our device to the Unity editor. If only all AR development hurdles could be resolved with a few clicks!

Joyce

When we began the programming, we decided to leverage existing examples from the Dual Render Fusion package. Time constraints meant we needed to optimize our development process, and the insights we gained from the Snapdragon Spaces mentors were invaluable. They recommended using ADB (Android Debug Bridge) to wirelessly deploy our app to the phone, allowing us to test changes without plugging in our phone. Despite sluggish internet speeds due to the high volume of participants at the hackathon, we were able to iterate on our app more efficiently.
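The wireless deployment workflow described above can be sketched as a short helper script. This is only an illustration under stated assumptions: the device IP address, port, and APK path are hypothetical placeholders, and the team's actual commands are not recorded in this post. The `adb tcpip`, `adb connect`, and `adb install -r` subcommands are standard ADB commands; note that the phone typically needs one initial USB connection before `adb tcpip` can switch it to network mode.

```python
import shlex
import subprocess


def wireless_deploy_commands(device_ip, apk_path, port=5555):
    """Build the ADB commands for deploying an APK over Wi-Fi.

    device_ip, apk_path and port are hypothetical example inputs.
    """
    target = f"{device_ip}:{port}"
    return [
        # Switch the USB-connected phone to listen for ADB over TCP/IP.
        ["adb", "tcpip", str(port)],
        # Connect to the phone over the network (USB can be unplugged now).
        ["adb", "connect", target],
        # Reinstall the app on that device, keeping its existing data.
        ["adb", "-s", target, "install", "-r", apk_path],
    ]


def deploy(device_ip, apk_path, port=5555):
    """Run each command in order and stop at the first failure."""
    for command in wireless_deploy_commands(device_ip, apk_path, port):
        subprocess.run(command, check=True)


if __name__ == "__main__":
    # Print the commands instead of running them, so the sketch is safe to try.
    for command in wireless_deploy_commands("192.168.0.42", "build/app.apk"):
        print(shlex.join(command))
```

Once the connection is established, only the final install command needs to be repeated for each new build, which is what makes the iteration loop faster than plugging the phone in every time.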

One of the primary objectives of our application is to empower users to redesign shared urban spaces using customized 3D assets. To achieve this, we delved into asset generation, exploring options that would grant users freedom in creating their own 3D assets. We settled on Meshy AI, a platform that offers text-to-3D model AI generation. For our prototype, we provided users with preset options, laying the foundation for real-time model generation. Currently, we’re working on bridging the gap between Unity and Meshy’s text-to-model API to enable real-time model generation. This integration will allow for personalized 3D asset creation – a significant step forward in our mission to give users more control.

Sunday

Lily

After pencils down on Sunday afternoon, we entered the judging session of the hackathon. The room buzzed with energy during the initial round, as judges interacted with groups presenting their ideas. The judges approached us with stopwatches and listened attentively to our pitch. In the brief intervals, we also took the opportunity to showcase our project to other participating teams and friends who came to visit. To our delight, we received news shortly after the first round that we had advanced to the final round.

Drawing from the experience of the initial round, we fine-tuned the delivery of our pitch, organizing it to begin with a concept introduction, followed by a user journey walk-through, and concluding with a prototype try-on. The final round of judging ran in a round-robin format, with each of the 11 judges rotating to the next group after a 7-minute session. While physically taxing, this marathon of presentations allowed us to collect valuable insights from user testing on the Snapdragon Spaces dev kit employed for our application.

What’s next?

After getting so much feedback from judges, fellow hackers, and from the Snapdragon Spaces team themselves, we realized that our work wasn’t over – we want to bring Community Canvas closer to reality, as a tool for transparent decision-making in our cities. To that end, we’re refining some of our designs, researching other ways we can invite user creativity in our app, and joining other events, like the NYU Startup Bootcamp, to get more feedback on what we can improve.


Introducing QR Code Tracking for Snapdragon Spaces

A much-requested feature is now live: QR Code tracking unlocks new opportunities for developers, expanding the possibilities of connected devices enhanced by Extended Reality experiences.

Jan 15, 2024

What is QR Code Tracking?

QR codes are everywhere, from restaurant menus to factory floors. When we see one, we instinctively know what to do: point our device camera, scan the code and get instant access to information. Scanning QR codes has also been a popular way to access augmented reality, virtual reality and mixed reality experiences, whether it’s to attend a virtual concert, join a Wi-Fi network or get step-by-step instructions to fix your coffee machine.

In addition to easy access, QR codes can also serve as a trigger for XR experiences, complementing (or completely replacing, if required) image or object targets, while keeping fast and reliable recognition and tracking to initialize AR, VR and MR experiences. For developers, the simplified generation of QR Codes skips the hurdle of creating custom image targets and corresponding calls to action, while simultaneously encoding all necessary data to perform the XR-powered task. The universal QR code detection function further facilitates the development and maintenance of XR experiences, without the need for cloud services and frequent app updates.

In its first iteration, the feature supports QR Code versions up to 10 (DIN ISO 18004), enabling recognition, detection and tracking from an approximate distance of 1 meter or more (for targets scaled at a sufficient minimum size) using Snapdragon Spaces supported devices. Developers can benefit from scanning and decoding functions (similar to QR Code reader apps on phones), as well as real-time tracking in 6DOF, allowing for hands-free use cases and even collaborative multi-user applications.

“The recent addition of live QR code tracking from Snapdragon Spaces allows us at Sphere to provide users with a more seamless and precise user experience when it comes to spatial content and co-located collaboration in XR. It is also a great example of Qualcomm’s commitment to helping developers achieve their goals,” says Colin Yao, CTO at Sphere.
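To give a feel for what the version limit and the minimum target size mentioned above mean in practice, here is a small sketch. It relies on two facts from the QR Code standard: a version V symbol has 17 + 4V modules per side, and a quiet zone of four modules surrounds the symbol. The 3 mm module size used in the demo is a hypothetical printing choice, not a Snapdragon Spaces requirement.

```python
QUIET_ZONE_MODULES = 4  # blank margin on each side, per the QR Code standard


def modules_per_side(version):
    """Number of modules along one edge of a QR Code of the given version."""
    if not 1 <= version <= 40:
        raise ValueError("QR Code versions range from 1 to 40")
    return 17 + 4 * version


def printed_side_mm(version, module_mm):
    """Edge length of the printed target, quiet zone included.

    module_mm is the width of a single module (an assumed print setting).
    """
    total_modules = modules_per_side(version) + 2 * QUIET_ZONE_MODULES
    return total_modules * module_mm


if __name__ == "__main__":
    for version in (1, 5, 10):
        side = printed_side_mm(version, module_mm=3.0)
        print(f"version {version}: {modules_per_side(version)} modules, {side} mm wide")
```

Higher versions pack more data into more modules, so for a fixed printed size each module gets smaller and harder to resolve at a distance. That trade-off is one reason to keep payloads short when targets must be read from farther away.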

Why use QR Code Tracking in your XR experiences?

For the enterprise, Snapdragon Spaces will now unlock the possibility of scalable XR experiences, expanding the connectivity of machinery, factory floor and professionals. Using headworn devices, frontline workers can use QR Code Tracking to distinguish millions of uniquely identifiable instances while performing hands-free training, maintenance and remote assistance tasks. This feature can equally support customer-focused use cases such as augmented product packaging, XR product display with 3D models, interactive educational materials, XR-powered showrooms and more.

QR Code Tracking can be combined with other features available on the Snapdragon Spaces SDK, such as Local Anchors, Positional Tracking, Plane Detection and more. For example, QR Codes can be used both as a target and as an initial Spatial Anchor (when fixed on a position), helping the user and the engine get spatial orientation in the real world. Using QR Codes also allows companies to encode a variety of data formats like product serial numbers, machinery information, videos, URLs and more, which can trigger augmentations that will continuously be rendered in 3D.

“With Snapdragon Spaces now supporting QR Code Tracking, this is an important feature for XR applications as it continues to be an easy way to position objects, trigger specific actions or align users in an XR environment. It’s a feature we support with our XR streaming technology and it will make life easier for XR users,” adds Philipp Landgraf, Senior Director XR Streaming at Hololight.
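As a rough illustration of using a fixed QR Code as an initial anchor, the sketch below places virtual content at an offset expressed in the code's own coordinate frame. It is deliberately simplified and is not the Snapdragon Spaces API: the function names are hypothetical, and the tracked pose is reduced to a position plus a rotation about the vertical (Y) axis, whereas real 6DOF tracking reports a full 3D rotation.

```python
import math


def rotate_about_y(point, yaw_rad):
    """Rotate a 3D point around the vertical (Y) axis by yaw_rad radians."""
    x, y, z = point
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    return (c * x + s * z, y, -s * x + c * z)


def content_world_position(qr_position, qr_yaw_rad, local_offset):
    """World position of content attached to a tracked QR Code.

    qr_position and qr_yaw_rad stand in for the tracked pose (assumed inputs);
    local_offset is where the content sits relative to the code.
    """
    rx, ry, rz = rotate_about_y(local_offset, qr_yaw_rad)
    px, py, pz = qr_position
    return (px + rx, py + ry, pz + rz)


if __name__ == "__main__":
    # Content placed one meter along the code's local Z axis.
    position = content_world_position((1.0, 0.0, 2.0), math.pi / 2, (0.0, 0.0, 1.0))
    print(position)
```

Because the content position is always derived from the code's pose, every user who sees the same physical code computes the same world placement, which is the basis for aligning users in a shared space.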

Next steps

SDK 0.19 is now available for download at the Snapdragon Spaces Developer Portal. Check out our SDK changelog for Unity and Unreal to learn what’s new and get started with our QR Code Tracking sample. We’re excited to see how Snapdragon Spaces developers put this new feature to use and look forward to your feedback on Discord!


China XR Innovation Challenge 2023: Pushing the Boundaries of XR Development

The China XR Innovation Challenge has become a highly anticipated event in the XR community, showcasing the talent and innovation of developers and OEM partners. The 2023 edition of the contest brought together a record-breaking number of participants, highlighting the rapid growth and advancement of XR technology in China. In this blog post, we recap the highlights of the event and celebrate the winning teams that pushed the boundaries of XR development.

November 27, 2023

Spanning several months (from April to September 2023), the China XR Innovation Challenge 2023 attracted a staggering 505 registrations. This marked a significant increase compared to previous years, solidifying the contest’s position as the largest XR developer event in China. The event brought together developers, OEM partners, and XR enthusiasts, fostering collaboration and knowledge sharing among industry leaders. The event showcased the latest advancements in XR technology and featured a diverse range of contest tracks. Read on to learn about the key highlights of the event.

Contest Tracks:

Event organizers offered nine designated tracks, catering to various aspects of XR development. These tracks included XR Consumer, XR Enterprise, PICO MR Innovation, PICO Game, YVR Innovation, QIYU MR Innovation, Skyworth XR Innovation, and Snapdragon Spaces Tracks. A diverse range of tracks allowed participants to explore different areas of XR development and showcase their creativity and skills.

New for this year was a dedicated Snapdragon Spaces track that saw more than 40 entries. Selected developers were given development kits to work with, which resulted in 18 Spaces apps submitted for consideration by judges. Contestants created their apps on a variety of development kits powered by Qualcomm XR processors.

Technical Webinars:

To support the contestants and provide valuable insights, the event organizers conducted a series of eight technical webinars. These webinars covered topics such as VR/AR registration, 3D engine utilization, VR game development, and VR sports fitness. The webinars attracted a significant number of attendees, totaling over 21.2K views, highlighting the thirst for knowledge and expertise in the XR community.

Awards Ceremony:

The contest culminated in a grand final awards ceremony held in Qingdao. The ceremony brought together 132 attendees, including contestants, judges, industry experts, and XR enthusiasts. Together, we celebrated the achievements of the participants and recognized the most impressive and inspiring projects in the XR field.

Winning Teams:

The contest team recognized 41 projects as the most impressive and inspiring in their respective tracks. These winning teams showcased their innovation, creativity, and technical expertise. Here are a few examples of the selected finalist projects:

- Project 1: “Finger Saber” introduced a new gesture recognition experience in VR gaming, allowing players to control a lightsaber using hand gestures. The innovative control mechanism added a new level of immersion and interactivity to the gaming experience.

- Project 2: “End of the Road” was a cross-platform multiplayer cooperative game set in a post-apocalyptic world. Players worked together as outlaws to destroy evil forces and protect valuable resources. The game showcased the power of collaboration and teamwork in an immersive XR environment.

- Project 3: “EnterAR” was an AR tower defense shooting game that combined cartoon-style graphics with intuitive gameplay. Players defended a crystal from alien creatures using a bow and arrow, leveraging the power of AR technology to create an engaging and immersive experience.

Have a look at the video below for an overview of the winning projects:

It’s fair to conclude that China XR Innovation Challenge 2023 was a resounding success, showcasing the immense talent and innovation in the XR community. The event provided a platform for developers, OEM partners, and XR enthusiasts to come together, exchange ideas, and push the boundaries of XR development. The winning teams demonstrated the incredible potential of XR technology and its applications across various industries. As the XR landscape continues to evolve, events like this play a crucial role in driving innovation and shaping the future of XR in China.


Qualcomm Joins the Mixed Reality Toolkit (MRTK) Steering Committee

Mixed Reality Toolkit (MRTK) was originally conceived as a cross-platform open-source project by Microsoft to provide common XR functionality to developers. You may have heard the announcement that MRTK has been moved to its own independent organization on GitHub headed by a steering committee. We’re excited to announce that Qualcomm Technologies, Inc., along with other ecosystem players, is joining this committee.

October 4, 2023

As leaders in the XR space with solutions like our Snapdragon Spaces™ XR Developer Platform, we look forward to shaping the future of MRTK to keep it alive and thriving, while expanding on its ecosystem. Snapdragon Spaces helps developers build immersive XR experiences for lightweight headworn displays powered by Android smartphones. Key features of Snapdragon Spaces include Positional Tracking, Local Anchors, Plane Detection, and more. This year we announced extended support across the XR spectrum, along with the Dual Render Fusion feature designed to help developers transform their 2D mobile applications into spatial 3D experiences.

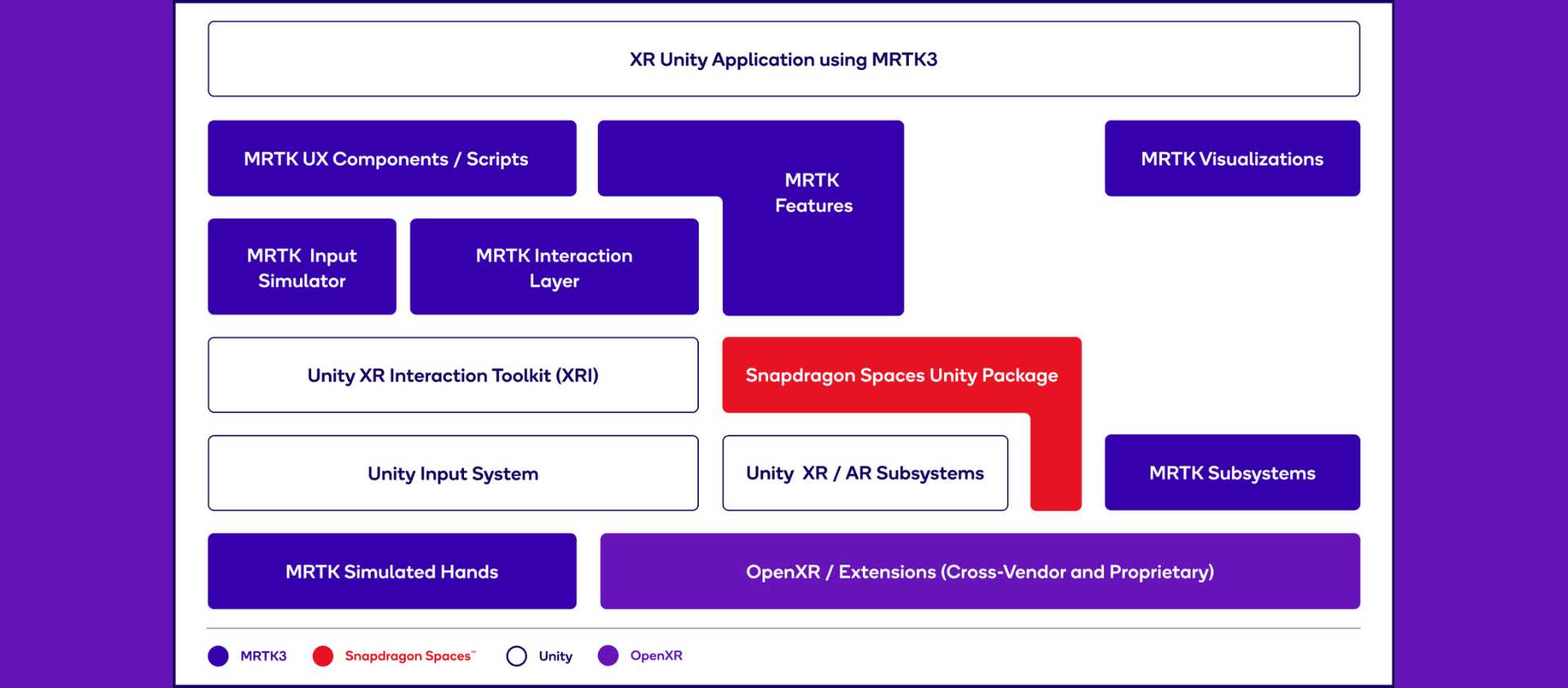

We have a vested interest in MRTK as it can be integrated into projects built with our Snapdragon Spaces SDK for Unity. MRTK is largely a UI and interaction toolkit while Snapdragon Spaces provides device enablement and support. In the following diagram, Snapdragon Spaces sits at the same layer as Unity’s XRI Toolkit and provides access to device functionality (e.g., subsystems) that MRTK can build upon.

There is a strong community around MRTK that spans hobbyists to enterprise, which enables rapid development, consistent UI, and cross-platform deployment. As part of this, we’re also excited to announce some of the original architects and project team members from the MRTK project have joined the Snapdragon Spaces team. Their knowledge and expertise in the XR domain is sure to help drive advancements in both MRTK and Snapdragon Spaces.

Pictured: Kurtis Eveleigh, Nick Klingensmith, Caleb Landguth, Isa Cantarero, Ramesh Chandrasekhar, Dave Kline, Brian Vogelsang, Steve Lukas

Qualcomm Technologies, Inc. is excited to be a part of MRTK’s steering committee and to welcome the new team members. Recently, on September 6, 2023, the steering committee released the general availability (GA) version of MRTK3. Going forward, Qualcomm Technologies, Inc. will continue to contribute code and functionality so that all stakeholders may benefit. We aim to ensure that the architecture maintains its cross-platform vision and promise, while providing best-in-class support for Snapdragon processors and Snapdragon Spaces.

For more information, check our documentation and our MRTK3 Setup Guide which shows how to integrate MRTK3 into a Unity project.


Accelerating the future of perception features research

The Spatial Meshing feature enriches the way users interact with the 3D environment and provides better augmented reality experiences. For example, Spatial Meshing allows users to place virtual board games on top of a table and to drive virtual avatars that navigate through the environment. One of the vital ingredients for a good user experience when using Spatial Meshing is accurate and efficient 3D reconstruction. In this post, we briefly cover two of our recent public contributions to improve depth perception and 3D reconstruction, accepted for presentation at the International Conference on Computer Vision (ICCV).

September 29, 2023

Depth-Guided Neural 3D Scene Reconstruction

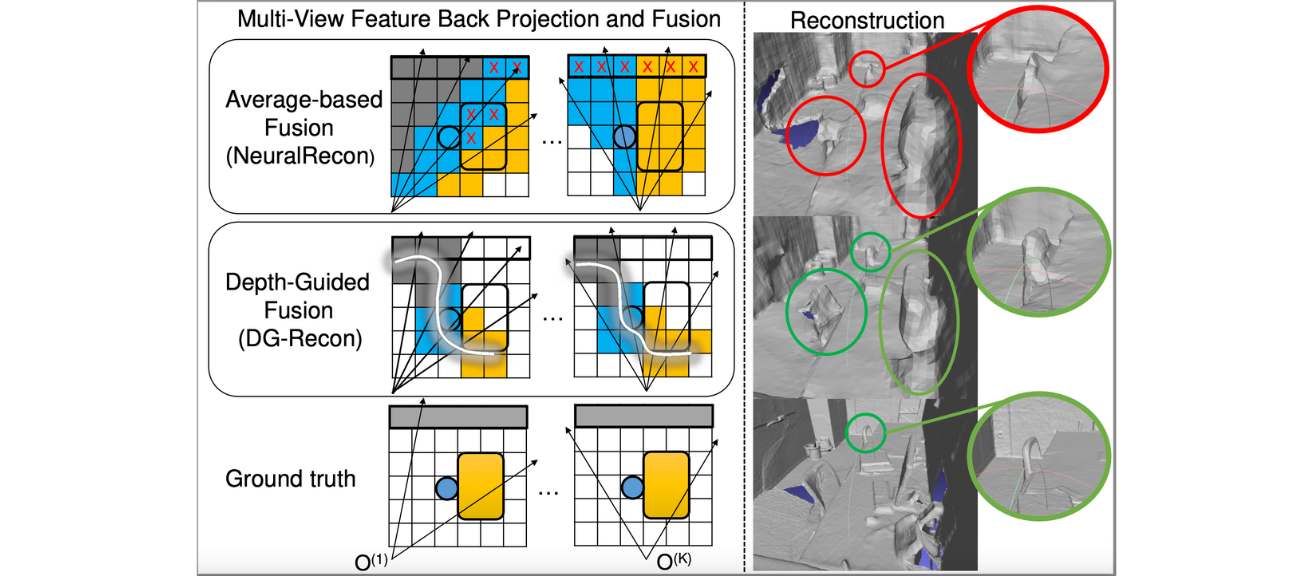

Without depth sensors, existing volumetric neural scene reconstruction methods (Atlas [i], NeuralRecon [ii], etc.) suffer from depth ambiguity when back-projecting 2D features to 3D space. In this work, we propose to utilize depth priors, obtained from efficient monocular depth estimation, to guide the feature back-projection process. Figure 1 illustrates how depth guidance could reduce erroneous features and improve separation of objects in the reconstruction.

Figure 1. Feature back-projection without (Average-based) and with depth guidance.

Another common pitfall of volumetric-based scene reconstruction methods is the use of average for multi-view feature fusion. The average operation discards the cross-view consensus information which is critical to distinguish voxels on and off surfaces. We propose two alternative fusion mechanisms: the variance-based (var) fusion and the cross-attention-based (c-att) fusion. The non-learnable variance operator is shown to be as efficient as the average operator and delivers significantly better reconstruction. The learnable cross-attention module further improves the reconstructed geometry while being more efficient than the existing self-attention-based fusion modules.
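The difference between average and variance fusion can be seen in a toy example. The sketch below is not the DG-Recon implementation: it uses a single made-up scalar feature per view, whereas the actual method fuses learned feature vectors per voxel inside a network. It only shows why averaging can hide the cross-view disagreement that the variance exposes.

```python
from statistics import fmean, pvariance


def average_fusion(view_features):
    """Fuse per-view features for one voxel by taking their mean."""
    return fmean(view_features)


def variance_fusion(view_features):
    """Fuse per-view features for one voxel by taking their variance.

    Low variance means the views agree, which hints at a real surface.
    """
    return pvariance(view_features)


# Hypothetical scalar features observed for two voxels from four views.
on_surface = [0.5, 0.5, 0.5, 0.5]   # all views see the same thing
off_surface = [0.0, 1.0, 0.0, 1.0]  # views disagree (free space or occlusion)

if __name__ == "__main__":
    for name, features in (("on surface", on_surface), ("off surface", off_surface)):
        print(name, average_fusion(features), variance_fusion(features))
```

Both voxels have the same average, so an average-fused feature cannot tell them apart, while the variance is zero for the consistent voxel and clearly non-zero for the inconsistent one.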

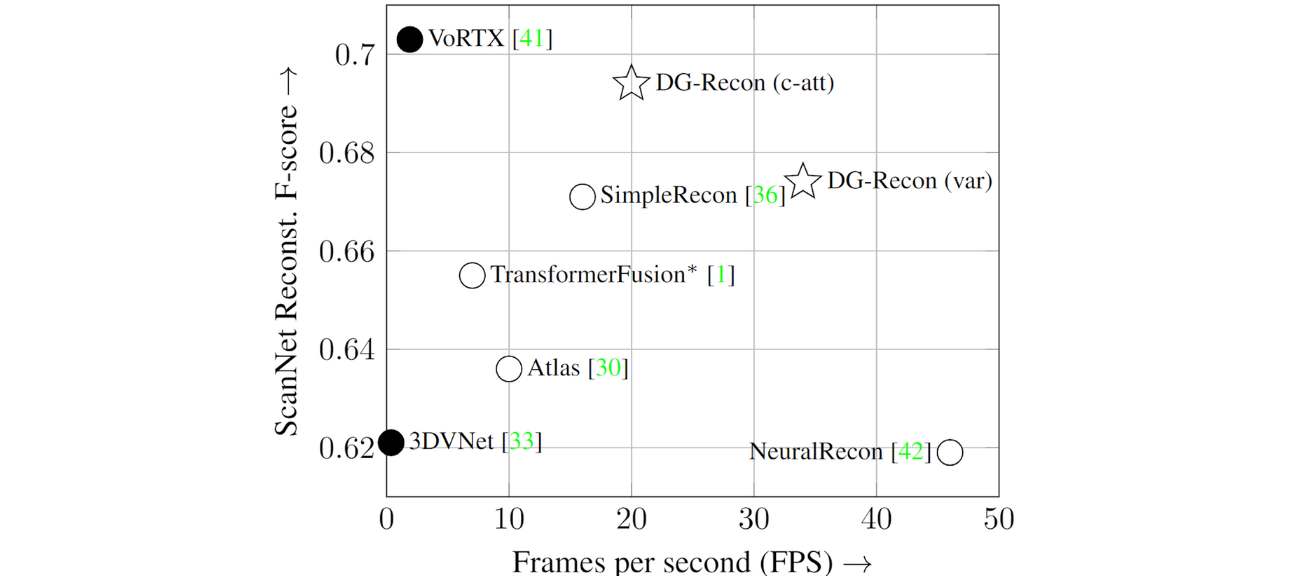

Figure 2. The accuracy-efficiency tradeoff for 3D reconstruction. Closed circles: offline methods; Open circles and stars: online methods.

Figure 2 compares the two variants of our method (DG-Recon) against the SOTA 3D reconstruction methods, including VoRTX [iii], SimpleRecon [iv], TransformerFusion [v] and 3DVNet [vi], in terms of the reconstruction F-score and frames per second. Our method achieves the best performance-efficiency trade-off among online methods and reaches an F-score close to that of the SOTA offline method, VoRTX. The video below demonstrates a live reconstruction session with our method. The geometry inside the office room is incrementally updated and refined every 9 keyframes (approximately every 1.5 seconds).

Figure 3. Live reconstruction with DG-Recon.

For more technical details on this work, please refer to our ICCV23 paper: Jihong Ju, Ching Wei Tseng, Oleksandr Bailo, Georgi Dikov, and Mohsen Ghafoorian, “DG-Recon: Depth-Guided Neural 3D Scene Reconstruction”, International Conference on Computer Vision 2023.

Improved Self-supervised Depth Estimation on Reflective Surfaces

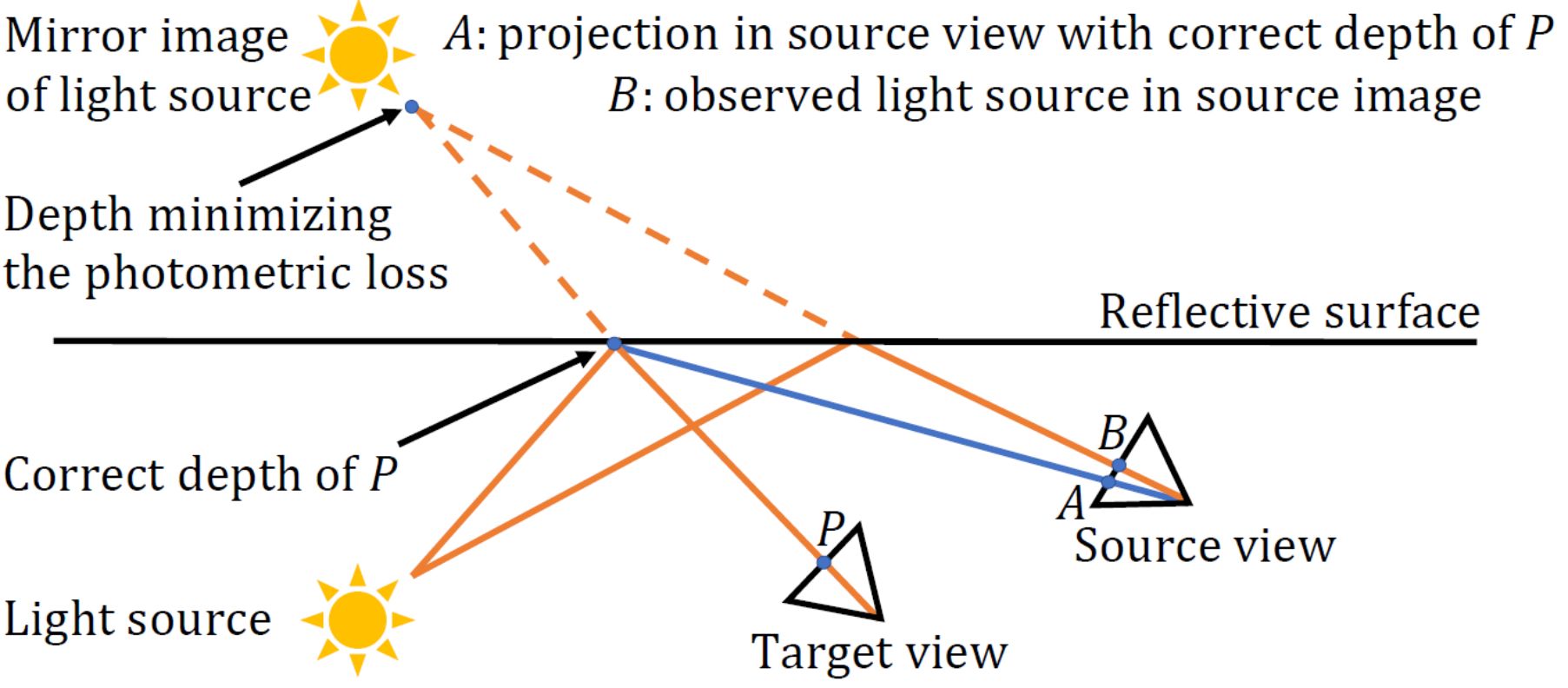

Requiring per-pixel ground truth at large volume for reliable supervised training is a laborious task that also depends on specialized hardware, e.g., LiDAR, which limits the size and diversity of the data. Therefore, self-supervised training schemes have gained extensive attention, resulting in competitive self-supervised methods that mainly rely on a photometric image reconstruction loss [viii]. However, the discrepancy between the optimization objective (photometric image reconstruction) and the actual test-time use (dense depth prediction) can sometimes result in degenerate solutions that satisfy the training objective but are not desirable from the point of view of actual usage. One such example is the behavior of self-supervised depth models on specular/reflective surfaces, where the models are observed to predict much larger depth values than the real surface distance on the specular reflections. Figure 4 depicts the reason why this happens, an example demonstrating such mis-predictions, and how 3DDistillation (3DD), our proposed method published at ICCV23, resolves this issue.

Figure 4. An illustration of the reason for mis-prediction of self-supervised depth models on specular surfaces.

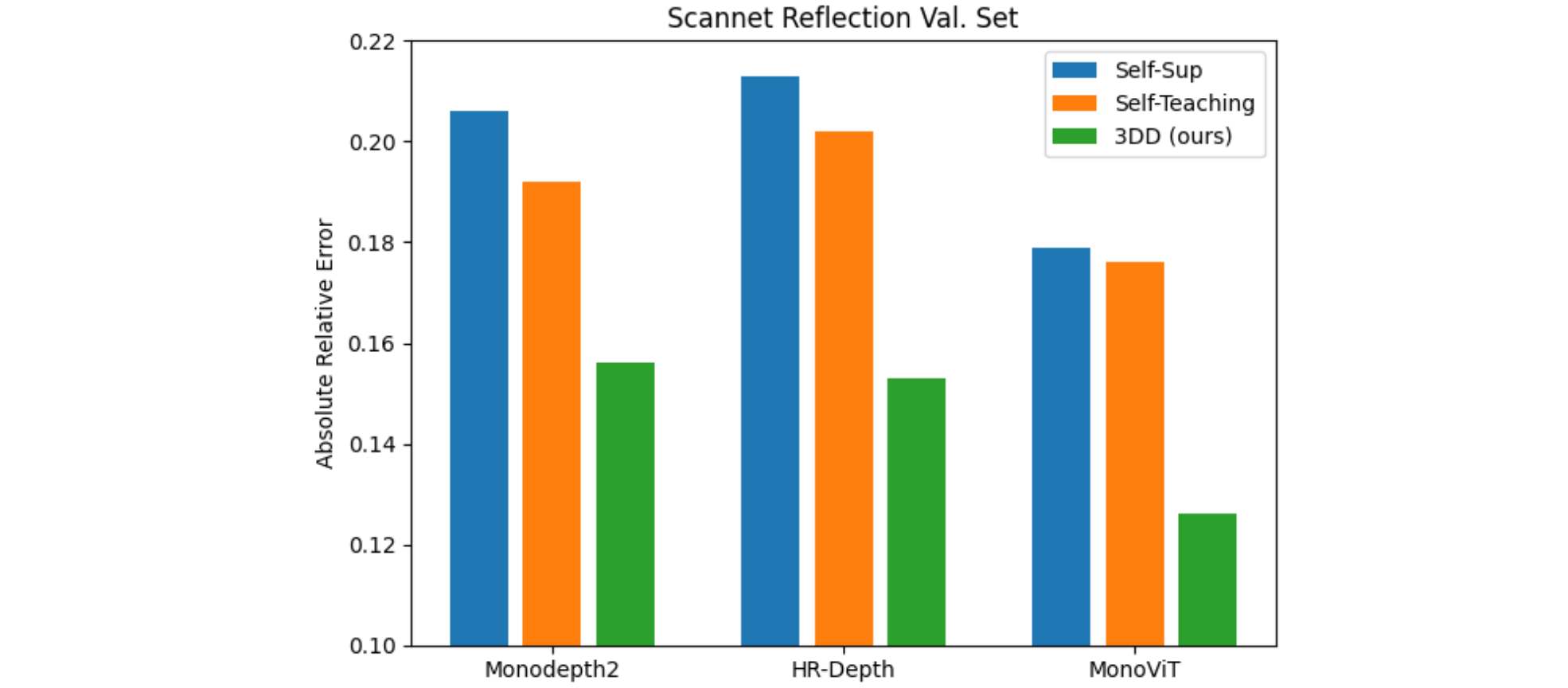

The main idea behind our proposed method is to bring a further 3D prior into the training process through the 3D fusion of the predicted depths, projecting them back to the corresponding frames and using them as pseudo-labels to train the model; hence the name “3DDistillation”! As shown in the quantitative and qualitative analysis (see Figures 5 and 6), this significantly rectifies the model’s behavior on specular surfaces, while keeping the model fully self-supervised with no requirement for sensor ground truth. By experimenting with different model architectures (Monodepth2, HR-Depth [ix], MonoVIT [x]), we showed that our contributions are generic and agnostic to the underlying architecture.

Figure 5. Quantitative comparisons on Scannet Reflection Set with various backbone models.

Figure 6. Sample qualitative depth predictions and 3D reconstruction from 3D Distillation.

For more technical details on this work, please refer to our ICCV23 paper: Xuepeng Shi, Georgi Dikov, Gerhard Reitmayr, Tae-Kyun Kim, and Mohsen Ghafoorian, “3D Distillation: Improving Self-Supervised Monocular Depth Estimation on Reflective Surfaces”, International Conference on Computer Vision 2023.

Find out more about Spatial Meshing and its use cases.

The aforementioned contributions are just two examples of the many cutting-edge research projects we are conducting at XR Labs Europe. Our goal is to constantly push the quality of the perception features offered through the Snapdragon Spaces XR Developer Platform. The team actively explores novel ideas and methodologies to further improve the accuracy and efficiency of our methods, and to enable a better experience for our XR technology end-users and developers.

i Murez, Zak, et al. “Atlas: End-to-end 3d scene reconstruction from posed images.” European Conference on Computer Vision. 2020.

ii Sun, Jiaming, et al. “NeuralRecon: Real-time coherent 3D reconstruction from monocular video.” Conference on Computer Vision and Pattern Recognition. 2021.

iii Stier, Noah, et al. “Vortx: Volumetric 3d reconstruction with transformers for voxelwise view selection and fusion.” International Conference on 3D Vision. 2021.

iv Sayed, Mohamed, et al. “SimpleRecon: 3D reconstruction without 3D convolutions.” European Conference on Computer Vision. 2022.

v Bozic, Aljaz, et al. “Transformerfusion: Monocular rgb scene reconstruction using transformers.” Advances in Neural Information Processing Systems. 2021.

vi Rich, Alexander, et al. “3dvnet: Multi-view depth prediction and volumetric refinement.” International Conference on 3D Vision. 2021.

vii Godard, Clément, et al. “Unsupervised monocular depth estimation with left-right consistency.” Conference on Computer Vision and Pattern Recognition. 2017.

viii Godard, Clément, et al. “Digging into self-supervised monocular depth estimation.” International Conference on Computer Vision. 2019.

ix Dai, Angela, et al. “ScanNet: Richly-annotated 3d reconstructions of indoor scenes.” Conference on Computer Vision and Pattern Recognition. 2017.

x Lyu, Xiaoyang, et al. “Hr-depth: High resolution self-supervised monocular depth estimation.” AAAI Conference on Artificial Intelligence. 2021.

xi Zhao, Chaoqiang, et al. “Monovit: Self-supervised monocular depth estimation with a vision transformer.” International Conference on 3D Vision. 2022.

Snapdragon branded products are products of Qualcomm Technologies, Inc. and/or its subsidiaries.

Meet some of the 80+ Companies in the Snapdragon Spaces Ecosystem

At AWE 2023 we announced that our Snapdragon Spaces Pathfinder Program has grown to over 80 member companies. This program helps qualifying developers succeed through early access to platform technology, project funding, co-marketing and promotion, and hardware development kits.

September 18, 2022

But it’s not just program members who make up the ecosystem behind our Snapdragon Spaces™ XR Developer Platform. Qualcomm Technologies is collaborating with an ecosystem of leading global operators and smartphone OEMs to bring headworn XR experiences to market across devices and regions around the world. In conjunction with 3D engine developers and content creators building immersive XR content, this ecosystem of companies is ushering in the next generation of XR headsets and experiences to the market.

Several of these companies have publicly announced how they’re collaborating with the platform and using Snapdragon Spaces to build apps for headworn XR. With so many projects spanning a wide range of verticals and use cases, you can’t help but be inspired. So, we decided to share the links to these announcements, organized by vertical, hoping they’ll inspire your next XR project.

Enterprise: Collaboration

- Arthur Digital: Immersive collaborative products, including virtual whiteboards, screen sharing, and real-time spatial communication for distributed teams. Use cases include learning and development, and workshops.

- Arvizio: Their AR Instructor product offers live see-what-I-see videos and interactive mark-ups in AR, enabling instructions to be overlaid in the user’s field of view.

- Hololight: The company’s Stream SDK offloads real-time rendering of compute-intensive images in AR/VR apps to powerful cloud infrastructure or local servers.

- Lenovo: Lenovo is working with several XR developers to make cutting-edge applications powered by Snapdragon Spaces technology available on the ThinkReality VRX. Use cases today include immersive training as well as collaboration in 3D environments.

- Pretia: The company is porting its MetaAssist mobile app to AR glasses.

- ScopeAR: Frontline workers use the company’s WorkLink AR app for employee training, product and equipment assembly, maintenance and repair, field and customer support, and more.

- Sphere: The company creates an AR environment where remote teams feel like they are working in the same room through highly realistic avatars, real-time spatial-audio language translations, and integrations with conventional conferencing.

- Taqtile: Their Manifest application enables deskless workers to access AR-enabled instructions and remote experts to perform tasks more efficiently and safely. It supports an expanding number of heads-up displays (HUDs), so enterprise customers can select hardware platforms based on their requirements.

Training

- DataMesh: Digital twin content creation and collaboration platform makes digital twins more accessible to frontline workers and creators while addressing workflow challenges in training, planning, and operations.

- DigiLens: Waveguide display technologies and headworn smartglasses for enterprise and industrial use cases, powered by the Snapdragon XR2 5G Platform.

- Pixo VR: Simplifies access to and management of XR content. The platform can host any XR content, works on all devices, and offers a vast library of off-the-shelf VR training content.

- Roundtable Learning: VR headsets for immersive enterprise training solutions.

- Uptale: Immersive learning ranging from standard operating procedures and security rules to quality control and managerial situations, and onboarding.

Productivity

- Nomtek: Their StickiesXR project is a Figma prototype that transforms traditional 2D workflows into XR experiences.

Education

- AWE 2023 award winner PhiBonacci: Provides hands-on training that is safe, efficient, and impactful for learning and working in medical, engineering, and industry 4.0 fields.

WebXR

- Wonderland: A development platform for VR, AR and 3D on the web, now built on Snapdragon Spaces to expand cooperation with platform and hardware providers.

- Wolvic: Offers a multi-device, open-source web browser for XR.

Gaming and Entertainment

- Mirroscape: MR technology and AR glasses to enable tabletop games in XR. Maps and miniatures are anchored to players’ tables just like real objects, providing them with unique and immersive views from virtually anywhere.

- Skonec Entertainment: Develops XR content that enables efficient and sophisticated spatial experiences, particularly in XR game development.

Health, Wellness, and Fitness

- Kittch: Provides interactive cooking instructions and other actions (e.g., set timers) using AR glasses, hand tracking, and eye tracking, thus freeing users’ hands to perform the necessary cooking steps.

- XR Health: Immersive VR, AR, or MR healthcare experiences, including training and collaboration in 3D, on devices powered by Snapdragon.

Others

- ArborXR: Provides XR management software to Qualcomm Technologies’ XR customers and OEMs. Organizations can manage device and app deployment and lock down the user experience with a kiosk mode and a customizable launcher.

- Echo3D: Cloud-based platform that streamlines the distribution of XR software and services.

- LAMINA1: Blockchain optimized for the open metaverse, including novel approaches that leverage blockchain, NFTs, and smart contracts to bridge virtual and real-world environments (e.g., next-generation ticketing and loyalty programs).

- OPPO MR Glass: OPPO MR Glass Developer Edition will become the official Snapdragon Spaces hardware developer kit in China.

- TCL RayNeo: The company will upgrade its RayNeo AR wearables with spatial awareness, object recognition and tracking, gesture recognition, and more, creating new possibilities in smart home automation, indoor navigation, and gaming.

Get Started

Initially released in June 2022 and regularly updated (see our changelog), Snapdragon Spaces provides developers with the versatility to build AR, MR, and VR apps across enterprise and consumer verticals. Developers, operators, and OEMs are free to monetize globally through existing Android-based app store infrastructure on smartphones. With proven technology and a cross-device open ecosystem, Snapdragon Spaces gives developers an XR toolkit to unlock pathways to consumer adoption and monetization.

You can get started on your headworn XR app today with the following resources:

- Free downloadable SDKs for Unity and Unreal

- Head over to the Snapdragon Spaces Documentation

- Get equipped with Hardware Development kits.

- Join our Developer Community Forum, Discord channel, and FAQs.

Snapdragon Spaces Developer Team

Snapdragon Spaces is a product of Qualcomm Technologies, Inc. and/or its subsidiaries.

Layering spatial XR experiences onto mobile apps with Dual Render Fusion

We are still buzzing with excitement around our announcement of Dual Render Fusion at AWE 2023 – a new feature of the Snapdragon Spaces™ XR Developer Platform. We’ve got a number of developers already building apps with Dual Render Fusion and can’t wait to see what you will create. But first, let us show you how it works.

June 28, 2023

Think about transforming your 2D mobile application into a 3D spatial experience. Dual Render Fusion allows a Snapdragon Spaces-based app to simultaneously render to the smartphone and a connected headworn display like the ThinkReality A3 included in the Snapdragon Spaces dev kit. In this setup, the smartphone can be used as a physical controller and a primary display (e.g., to render user interfaces and conventional 3D graphics). The smartphone is also connected to a headworn XR display, which provides a secondary spatial XR view in real time.

From a technical standpoint, the app now has two cameras (viewports) rendering from the same scene graph in a single Activity. The image below shows how developers can now enable two cameras in a Unity project with Dual Render Fusion by selecting each Target Eye in the Game Engine Inspector:

Once enabled, you can then preview the cameras. The image below shows Dual Render Fusion running in Unity on an emulator and how perception can be effectively layered onto the 2D smartphone experience.

The left viewport shows the 3D scene view in Unity. The middle shows a simulation of the primary (on-smartphone) display with the 3D scene and user interface controls. The right viewport simulates the rendering for the secondary (headworn XR) display, in this case, with simulated Hand Tracking enabled. The advantage of this setup is that the cube can be manipulated by the smartphone touchscreen controls or by perception-based hand tracking without any networking or synchronization code.
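To make the "one scene, two cameras" idea concrete, here is a language-agnostic sketch written in Python rather than Unity C#. It is not the Snapdragon Spaces API: the `Cube` and `Camera` classes and the two input handlers are hypothetical stand-ins, used only to show that both views read the same scene state, so no synchronization step is needed.

```python
from dataclasses import dataclass, field

@dataclass
class Cube:
    """Shared scene state: a single object both viewports look at."""
    position: list = field(default_factory=lambda: [0.0, 0.0, 1.0])

@dataclass
class Camera:
    """A viewport onto the shared scene (hypothetical, not a Unity Camera)."""
    name: str

    def render(self, scene):
        return f"{self.name}: cube at {tuple(scene.position)}"

def on_touch_drag(scene, dx, dy):
    """Touchscreen input from the phone moves the cube in x/y."""
    scene.position[0] += dx
    scene.position[1] += dy

def on_hand_move(scene, dz):
    """Hand-tracking input from the glasses moves the cube in z."""
    scene.position[2] += dz

scene = Cube()
phone_view = Camera("phone")
glasses_view = Camera("glasses")

on_touch_drag(scene, 0.5, 0.0)
on_hand_move(scene, 0.25)

# Both cameras render the same, already-updated scene state.
frames = [phone_view.render(scene), glasses_view.render(scene)]
```

Either input path mutates the one scene object, and each camera simply draws what is there on the next frame.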

Layering on spatial experiences for your users

Today, users are comfortable with their current smartphone experiences. But just as the first smartphones drove a paradigm shift in human machine interaction (HMI), we’re now at an inflection point where XR is driving a shift towards spatial experiences which enhance users’ daily lives. That’s why we believe Dual Render Fusion is so important. You can use it to introduce your users to spatial concepts while taking full advantage of existing smartphone interactions. Apps can now be experienced in new immersive ways while retaining familiar control mechanisms. Best of all, your users don’t have to give up their smartphones. Instead, they can reap the benefits of spatial XR experiences that you’ve layered on.

A great example of this is Virtual Places by mixed.world, demoed at our AWE 2023 booth. Using Snapdragon Spaces and Dual Render Fusion, Virtual Places enhances conventional 2D map navigation with a 3D spatial view. Users can navigate maps with their smartphone while gaining visual previews of how an area actually looks. The multi-touch interface allows a familiar pinch and zoom interaction to manipulate the map view on the phone, while a 3D visualization provides an immersive experience in the glasses.

Table Trenches by DB Creations, also demoed at our AWE 2023 booth, provided an example of a game that integrated Dual Render Fusion with relative ease.

DB Creations co-founder Blake Gross had this to say about the process: “…with Fusion, we are able to create experiences that anyone, without AR experience, can pick up and use by utilizing known mobile interaction paradigms. With Table Trenches, this was especially useful, because we were able to take the UI out of the world and into a familiar touch panel. Additionally, Fusion enables the smartphone screen to be a dynamic interface, so we can change how the phone behaves. We do this in Table Trenches when switching between surface selection and gameplay. Fusion was easy to integrate into our app since it uses the familiar Unity Canvas. Since our game was already built to utilize the Unity Canvas, it was as simple as setting up a new camera, and reconfiguring the layout to best fit the Snapdragon Spaces developer kit. We noticed at AWE how easy it was for new users to pick up and play our game without us needing to give any manual explanation of what to do.”

Developing With Dual Render Fusion

With the number of global smartphone users estimated at around 6.6 billion in 2031, there is a large market for mobile developers to tap into and offer new experiences. Snapdragon Spaces and Dual Render Fusion facilitate rapid porting of existing Unity mobile apps and demos to XR. You can create or extend existing 2D mobile applications built with Unity into 3D XR experiences with little or no code changes required just to get started. The general process goes like this:

- Create a new 3D or 3D URP project in Unity.

- Import the Snapdragon Spaces SDK and Dual Render Fusion packages.

- Configure the settings for OpenXR and Snapdragon Spaces SDK integration.

- Use the Project Validator to easily update your project and scene(s) for Dual Render Fusion with just a few clicks.

- Build your app.

You can read more about the process in our Dual Render Fusion Scene Setup guide for Unity.

Download now

Ready to start developing with Snapdragon Spaces and Dual Render Fusion? Get started with these three steps:

- Create a new account on the Snapdragon Spaces Developer Portal – it’s quick to sign up and free.

- Head over to the Snapdragon Spaces Documentation and download the latest Snapdragon Spaces packages.

- Check out the Dual Render Fusion topic to learn more about setup.


AR’s inflection point: Dual Render Fusion feature is now available for mobile developers

Last week at AWE 2023, we introduced Dual Render Fusion – a new feature of Snapdragon Spaces™ XR Developer Platform designed to help developers transform their 2D mobile applications into spatial 3D experiences with little prior knowledge required.

June 6, 2023

What is Dual Render Fusion?

Snapdragon Spaces Dual Render Fusion enables smartphone screens to become a primary input for AR applications, while AR glasses act as a secondary augmented display. The dual display capability allows developers and users to run new or existing apps in 2D on the smartphone screen while showing additional content in 3D in augmented reality. In practical terms, a smartphone acts as a controller for AR experiences, letting users select what they want to see in AR using familiar mobile UI and gestures. Imagine you are using your go-to maps app for sightseeing. With Dual Render Fusion, you can use the phone as usual to browse the map and at the same time, see a 3D reconstruction of historical places in AR.

Why use Dual Render Fusion in your AR experiences?

The feature makes it easier for developers to extend their 2D mobile apps into 3D spatial experiences without creating a new spatial UI. It’s also the first time in the XR industry that AR developers get a tool to combine multi-modal input with simultaneous rendering to smartphones and AR glasses. With Dual Render Fusion, Unity developers with little to no AR knowledge can easily add an AR layer to their existing app using just a few extra lines of code. The feature gives more control over app behavior in the 3D space, significantly lowering the entry barrier to AR. But that’s not all – while using the feature, you have the option to utilize all available inputs enabled with the Snapdragon Spaces SDK, including Hand Tracking, Spatial Mapping and Meshing, Plane Detection, and Image Tracking, or go all in on the convenience of the mobile touch screen for all input.

Why is it important?

It takes a lot of learning for developers to break into mobile AR and even more so to rethink what they already know to apply spatial design principles to headworn AR. The same applies to the end users as they need to get familiar with new spatial UX/UI and input. Enabling the majority of developers to create applications that are accessible to smartphone users will unlock the great potential of smartphone-based AR. The Snapdragon Spaces teams have been working hard to reimagine smartphone AR’s status quo and take a leap forward to fuse the phone with AR glasses. The Dual Render Fusion feature allows just that – to blend the simplicity and familiarity of the smartphone touch screen for input while leveraging the best of augmented reality. Dual Render Fusion unlocks smartphone-powered AR to its full potential, allowing us to onboard and activate an untapped market of millions of mobile developers. Hear our Director of XR Product Management Steve Lukas explain the vision behind the groundbreaking feature:

Download now

Dual Render Fusion (*experimental) is now available in beta as an optional add-on package for Snapdragon Spaces SDK for Unity version 0.13.0 and above. Download today and browse our Documentation to learn more. Don’t forget to share your feedback and achievements with our XR community on Discord.


Introducing Spatial Mapping and Meshing

Our 0.11.1 SDK release introduced the Spatial Mapping and Meshing feature to the Snapdragon Spaces™ developer community. Read on to learn more about our engineering team’s approach, use cases and important factors to consider when getting started.

April 7, 2023

What is Spatial Mapping and Meshing?

Spatial Mapping and Meshing is a feature of Snapdragon Spaces SDK that provides users with an approximate 3D environment model. This feature is critical in helping smart glasses understand and reconstruct the geometry of the environment.

Spatial Mapping and Meshing offers both a detailed 3D representation of the environment surrounding the user and a simplified 2D plane representation. Meshing is needed to calculate occlusion masks or to run physics interactions between virtual objects and the real world. Snapdragon Spaces developers can access every element of the mesh (vertex) and request updates to it.

Planes are used whenever a rough understanding of the environment is enough for the application, for example when deciding to place an object on top of a table or on a wall. Only the most important planes are returned by the API, since there is a trade-off between how many planes can be extracted and how fast they can be updated with new information from the environment.

In addition to meshes and planes, developers can use a collision check to see whether a virtual ray intersects the real world. This is useful for warning the user when they approach a physical boundary – either for safety or for triggering an action from the app.
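Conceptually, such a collision check amounts to intersecting a ray with the triangles of the environment mesh. The sketch below uses the well-known Möller–Trumbore ray-triangle test in plain Python; it illustrates the geometry only and is not the Snapdragon Spaces collision API. The function name, the sample triangle, and the 2.5 m warning distance are assumptions.

```python
def _sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def _dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def ray_triangle_distance(origin, direction, v0, v1, v2, eps=1e-9):
    """Return the distance along the ray to the triangle, or None on a miss."""
    edge1, edge2 = _sub(v1, v0), _sub(v2, v0)
    p = _cross(direction, edge2)
    det = _dot(edge1, p)
    if abs(det) < eps:            # ray is parallel to the triangle plane
        return None
    inv_det = 1.0 / det
    t_vec = _sub(origin, v0)
    u = _dot(t_vec, p) * inv_det  # first barycentric coordinate
    if u < 0.0 or u > 1.0:
        return None
    q = _cross(t_vec, edge1)
    v = _dot(direction, q) * inv_det  # second barycentric coordinate
    if v < 0.0 or u + v > 1.0:
        return None
    t = _dot(edge2, q) * inv_det
    return t if t > eps else None     # ignore hits behind the ray origin

# One mesh triangle on a wall 2 m in front of the user.
wall = ((-1.0, -1.0, 2.0), (1.0, -1.0, 2.0), (0.0, 1.0, 2.0))
hit = ray_triangle_distance((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), *wall)
miss = ray_triangle_distance((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), *wall)

WARNING_DISTANCE_M = 2.5  # assumed threshold for a boundary warning
too_close = hit is not None and hit < WARNING_DISTANCE_M
```

A real application would test the ray against many triangles (ideally through a spatial index) and keep the nearest hit.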

Why use Spatial Mapping in your AR experiences?

A big part of how well users perceive your app lies in understanding the environment and adapting your application to it. If you strive to achieve higher realism for your digital experiences, Spatial Mapping and Meshing is the right choice. Why? As humans, we understand the depth of space using visual cues that are spread around us in the real world. Occlusion is one of the most apparent depth cues – it happens when objects are partly or entirely hidden from view by other objects. Light also provides plenty of other natural depth cues – like shadows and glare on objects. Spatial Mapping and Meshing lets your augmented reality app emulate human vision by giving it the information it needs to use depth cues from the real world.

“The Sphere team has been incredibly excited for the Snapdragon Space’s release with Spatial Mapping and Meshing” says Colin Yao, CTO at Sphere. “Sphere is an immersive collaboration solution that offers connected workforce use-cases, training optimization, remote expert assistance, and holographic build planning in one turnkey application. As you can imagine, spatial mesh is a fundamental component of the many ways we use location-based content. By allowing the XR system to understand and interpret the physical environment in which it operates, we can realistically anchor virtual objects and overlays in the real-world” adds Yao. “Lenovo’s ThinkReality A3 smart glasses are amongst the hardware we support, and Sphere’s users that operate on this device will especially benefit from Spatial Mapping and Meshing becoming available.”

How does the Snapdragon Spaces engineering team approach Spatial Mapping and Meshing?

Our engineering team’s approach to Spatial Mapping and Meshing is based on two parallel components – frame depth perception and 3D depth fusion. Read on to learn more about each.

Frame Depth Perception

We leverage the power of machine learning by training neural networks on a large and diverse set of training data. Additionally, our networks benefit from running efficiently on the powerful Snapdragon® neural processors. To scale the diversity and representativeness of our training datasets, we do not limit the training data to supervised samples (e.g. sets of images with measured depth for every pixel). Instead, we leverage unlabelled samples and benefit from self-supervised training schemes. Our machine learning models are extensively optimized to be highly accurate and computationally efficient compared to sensor-based depth observations – all thanks to a hardware-aware model design and implementation.

3D Depth Integration

The 3D reconstruction system provides a 3D structure of a scene with a volumetric representation. The volumetric representation divides the scene into a grid of cells (or cubes) of equal size. Each cube in the volumetric representation (we’ll call them samples in this article) stores the signed distance from the sample centre to the closest surface in the scene. This type of 3D structure representation is called the signed distance function (SDF). Free space is represented with positive values that increase with the distance from the nearest surface. Occupied space is represented as samples with a similar but negative value. The actual physical surfaces are located at the zero-crossings of the signed distance.
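The sign convention can be illustrated with a toy scene. In this sketch (our own example, not the SDK's internal data structure) the only object is a solid sphere, and we sample the signed distance at the centres of a row of equally sized cells; the cell size and sphere placement are arbitrary assumptions.

```python
import math

def sphere_sdf(point, centre, radius):
    """Signed distance to a solid sphere: positive outside, negative inside."""
    return math.dist(point, centre) - radius

CELL_SIZE = 0.5                   # edge length of each cell, in meters
sphere_centre = (2.0, 0.0, 0.0)
sphere_radius = 1.0

# Sample centres of one row of cells along the x axis: 0.25, 0.75, 1.25, ...
sample_centres = [((i + 0.5) * CELL_SIZE, 0.0, 0.0) for i in range(6)]
sdf_row = [sphere_sdf(c, sphere_centre, sphere_radius) for c in sample_centres]

for centre, value in zip(sample_centres, sdf_row):
    label = "free" if value > 0 else "occupied"
    print(f"x={centre[0]:.2f}  sdf={value:+.2f}  {label}")
```

The sign flips between the samples at x = 0.75 and x = 1.25, which brackets the true surface at x = 1.0.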

The 3D reconstruction system generates the volumetric representation by fusing and integrating depth images into the volumetric reconstruction. For each depth image, the system also requires its pose (camera location and viewing orientation) at the acquisition timestamp, correlated with a global reference coordinate system. The 3D reconstruction system also extracts a 3D mesh representation of the surfaces in the volumetric reconstruction. This is done using a marching cubes algorithm that looks for the zero iso-surface of signed distance values in the grid samples. 3D reconstruction in its entirety is a rather complex and resource-intensive operation. Enabling the mesh of the environment in your XR app brings benefits and feature possibilities that often make it worth the computational cost.
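A one-dimensional sketch can show both steps, fusing depth observations and finding the zero-crossing. It makes assumptions the article does not state: the fusion uses a truncated signed distance with a simple running average (a common scheme in the literature), and the surface is located by linear interpolation between neighbouring samples, which is the 1D analogue of what marching cubes does on a 3D grid. All names and numbers are illustrative.

```python
def integrate_depth(tsdf, weights, centres, depth, trunc):
    """Fuse one depth observation along a single camera ray."""
    for i, z in enumerate(centres):
        sdf = depth - z            # positive in front of the surface
        if sdf < -trunc:
            continue               # far behind the surface: not observed
        value = min(sdf, trunc)    # truncate large free-space distances
        w = weights[i]
        tsdf[i] = (tsdf[i] * w + value) / (w + 1)  # running average
        weights[i] = w + 1

def find_zero_crossing(tsdf, weights, centres):
    """Return the interpolated surface position, or None if there is none."""
    for i in range(len(centres) - 1):
        a, b = tsdf[i], tsdf[i + 1]
        if weights[i] > 0 and weights[i + 1] > 0 and a > 0 >= b:
            t = a / (a - b)        # linear interpolation weight
            return centres[i] + t * (centres[i + 1] - centres[i])
    return None

# Samples every 10 cm along the ray, from 0.05 m to 2.95 m.
centres = [0.1 * i + 0.05 for i in range(30)]
tsdf = [0.0] * len(centres)
weights = [0] * len(centres)

# Three noisy depth observations of a surface at about 1.5 m.
for depth in (1.48, 1.52, 1.50):
    integrate_depth(tsdf, weights, centres, depth, trunc=0.3)

surface = find_zero_crossing(tsdf, weights, centres)
```

Averaging several observations smooths out per-frame depth noise, so the recovered surface sits close to 1.5 m even though no single observation measured exactly that.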

Enabling realism with Spatial Mapping and Meshing

Virtual Object Occlusion

Creating augmented reality experiences without occlusion has been common practice for a long time. An approximate mesh of the environment makes it possible to render virtual objects that are occluded by real objects. By rendering the environment mesh into the depth buffer together with all other virtual objects in your scene, you can create occlusion effects with little effort. To achieve this effect, you need to create a transparent shader that still writes into the depth buffer.
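The depth test behind this effect can be sketched on the CPU for a single row of pixels. This is an illustration of the principle, not shader code: the environment mesh contributes only depth (like the transparent, depth-writing shader described above), and a virtual pixel is shown only where it is closer than the real surface. The function name and the sample values are hypothetical.

```python
def composite_row(camera_row, env_depth_row, virtual_row):
    """Composite one row of pixels with a per-pixel depth test.

    camera_row:    what the user sees of the real world at each pixel
    env_depth_row: depth of the environment mesh at each pixel, in meters
    virtual_row:   (colour, depth) of the virtual object, or None if absent
    """
    output = []
    for real, env_depth, virtual in zip(camera_row, env_depth_row, virtual_row):
        if virtual is not None and virtual[1] < env_depth:
            output.append(virtual[0])  # virtual object is in front
        else:
            output.append(real)        # real surface occludes it
    return output

camera_row = ["wall", "table", "table"]
env_depth_row = [3.0, 1.0, 1.0]               # the table is closer than the wall
virtual_row = [("cube", 2.0), ("cube", 2.0), None]

result = composite_row(camera_row, env_depth_row, virtual_row)
# The cube is visible against the wall but hidden behind the table.
```

On a GPU the same comparison happens automatically in the depth test once the environment mesh has been written to the depth buffer.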

Virtual lighting and shadows in the real world

Similarly, the mesh of the real environment can be used to apply virtual lighting effects to the real world. When creating a virtual light source without a mesh of the environment, only virtual objects will be lit by this virtual light. This can cause some visual discrepancies. When there is a model of the real world that is lit, the discrepancy will be less visible. Shadows behave similarly. Without a model of the environment, virtual objects will not cast shadows on the real world. This can cause confusion about the objects’ depth and even give users the impression that objects are hovering. To achieve this effect, you need to create a transparent shader that receives lighting and shadows like an opaque shader.

Real lighting and shadows in the virtual world

When combining virtual and real-world content, it’s often very easy to spot where a virtual object starts and reality ends. If your goal is to have the most realistic augmentations possible, you need to combine real and virtual lighting and shadows. You can do this by having light estimation emulate the real-world lighting conditions with a directional light in your scene. Combined with meshing, light estimation allows you to cast shadows from real-world objects onto virtual objects.

Limitations

Depending on your hardware, there will be certain limitations to the precision of the detected mesh. For example, Lenovo ThinkReality A3 uses a monocular inference-based approach, which can yield certain imprecisions. Transparent or glossy objects (such as glass) will lead to fragmentation or holes in the mesh. The further away a real-world object is from the glasses, the less precise the generated mesh for it will be. This is logical: the RGB sensor captures less information about distant objects. The mesh is available for a distance of up to 5 meters from the user. Other methods of depth measurement (such as LIDAR) require specialized hardware, so there is a cost-benefit trade-off. While LIDAR could provide higher precision for some use cases, RGB cameras are already available in most devices and are more affordable.

Next steps

Refer to the Snapdragon Spaces documentation to learn more about the Spatial Mapping and Meshing feature, and use the Unity sample and Unreal sample to try it in your own project.


Snapdragon Spaces at MIT Reality Hack

Joining the world’s biggest XR hackathon as one of the key sponsors is a big endeavour that comes with a big responsibility. The team brought a dedicated Snapdragon Spaces™ hack track, two hosted workshops and networking events, and demo showcases to help hackathon participants stretch the limits of their imagination.

February 27, 2023

With five days full of tech workshops, talks, discussions and collaborations, the MIT hackathon brought together thought leaders, brand mentors, students, XR creators and technology lovers who flew from all over the world to participate. The overall event had 450 attendees. There were 350+ hackers from 26 countries placed into 70+ teams; and the Snapdragon Spaces track had 10 teams. All skill levels were represented, and participants had the opportunity to explore the latest technology from Qualcomm Technologies and Snapdragon Spaces.

The Snapdragon Spaces team joined other event key sponsors at the opening day Inspiration Expo. Using the opportunity to connect with participants, we answered questions about the XR developer platform, encouraged hackers to join the hack, and showcased our “AR Expo” demo. The demo uses Snapdragon Spaces SDK features, letting hackers experience a variety of AR use cases – from gaming to media streaming and kitchen assistance apps. Later on, hackers had the opportunity to join Steve Lukas, Director of XR Product Management at Qualcomm Technologies, Inc., and Rogue Fong, Senior Engineer at Qualcomm Technologies, Inc., at the workshop dedicated to developing lightweight AR glasses experiences. This augmented reality-focused session educated participants on the features and capabilities of the Snapdragon Spaces XR Developer Platform.

Closer collaboration with hackers followed at the Snapdragon Spaces hack track, where the team hosted 50 participants in 10 teams in a dedicated area for hacking. The range of project ideas was truly impressive, spanning games, social good projects, and education.

While some projects leaned into the functionality of the Lenovo ThinkReality A3 and Motorola edge+ phone HW devkit, others integrated additional hardware, such as 3D displays and neurofeedback devices.

Our track rewarded the most innovative, compelling and impactful experience using Snapdragon Spaces, with the Stone Soup team taking the first prize for using AR technology to help the unhoused conceptualize, customize and co-create their dream homes.

The winning team executed their idea with a high level of polish, utilizing Snapdragon Spaces’ Plane Detection and Hit Detection perception technology and integrating ESRI maps data into their project.

The runner-up, Skully, presented a multipurpose education technology solution that brings up learning modules and 3D models when target images are located. The solution offers students a hands-on approach to learning and allows using Hand Tracking and Gaze Tracking to interact with lesson material. Skully is highly inclusive and offers those unfamiliar with AR interaction learning modules in more traditional formats (such as video).

Worthy of honorable mention is Benvision – the winner of “Working together for inclusion and equality” for enabling the visually impaired to experience the world through a combination of the Snapdragon Spaces 6DoF headset plus a bespoke real-time machine learning algorithm which turns landscapes into soundscapes. Also, the Up in the Air team, who won in the “Spatial Audio” category, introduced an app that turns a daily working space into a pleasing fantasy-style environment, using Hand Tracking for navigation and Spatial Anchors to anchor a workspace in the user’s environment.

MIT Reality Hack 2023 left a long-lasting impression on all the participants. The opportunity to engage with key partners, industry leaders, and a broad XR developer community brought a new perspective on the use and future development of spatial computing. Our team left inspired by the unforgettable collaboration, ideation and creativity of the hackers and will be working on incorporating their valuable product feedback in the next SDK releases.

Snapdragon Spaces Developer team in collaboration with Qualcomm Developer Network
